Re:Staking Weekly #9

Why EigenLayer Bets on Agents, New ARPA Rewards, Renzo Adopts bzSOL, and Jito's Deposit Cap Removal

Welcome to issue 9 of Re:Staking Weekly!

In a bold move right after the Christmas break, EigenLayer surprised the crypto world by launching an aggressive campaign focused on AI agents. This pivot into a nascent sector has left many scratching their heads: what's driving this strategic bet?

In this issue, we dissect EigenLayer's AI strategy and its implications for the restaking ecosystem. We'll also cover developments across the space: Opacity AVS's Eliza.os plugin, ARPA's third rewards campaign, and updates from Renzo and Jito, including Renzo's new restaking token and the removal of Jito's deposit caps.

Let's dive in!

EigenLayer’s Bet on AI Agents

I initially had a hard time piecing together why EigenLayer would commit what looks like nearly all of its business resources to this trendy new vertical.

Certainly, everyone is talking about it, trading AI meme coins, and evangelizing a future where these agents replace the majority of the workforce.

But in a vertical this new, use cases are scarce, the user count is low, and the future remains uncertain.

What's the catch? Why would EigenLayer, the trendsetter and innovator that promised us a world where myriad services can be bootstrapped with day-1 decentralization and economic security, be so interested in an area seemingly so distant from its core?

Agent Architecture

We will have to start with the architecture.

A typical AI agent is a language-based system (i.e., the inputs are natural language) that can handle specific tasks. Architecturally speaking, it consists of two core components:

The Brain: A large language model, sometimes fine-tuned, that handles reasoning and decision-making. In practice, the agent typically calls a model API from providers such as OpenAI or services such as Cloudflare.

The Actions: A set of pre-programmed scripts that the agent can perform, each with its own trigger criteria. For example, when a user enters "execute BTC trade" or "post a tweet," the agent calls the corresponding pre-programmed API to carry out the command.

There are also other key components, such as a character definition (e.g., you want your agent to talk like Snoop Dogg), a database or memory system to store the current conversation, and so on.
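To make the two-part architecture concrete, here is a minimal sketch in Python. It is not how any particular framework implements agents: the `Agent` class, the `ACTION <name> <argument>` reply convention, `fake_brain`, and `post_tweet` are all hypothetical names invented for illustration, and the brain is a local stub rather than a real model API so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    # "The Brain": any callable that maps a prompt to text. In practice this
    # would wrap a hosted model API; it is injected here so the sketch runs offline.
    brain: Callable[[str], str]
    # "The Actions": action name -> pre-programmed handler.
    actions: Dict[str, Callable[[str], str]]
    # Character definition, prepended to every prompt.
    character: str = "You are a helpful assistant."
    # Memory: the running conversation.
    memory: List[str] = field(default_factory=list)

    def handle(self, user_input: str) -> str:
        self.memory.append(f"user: {user_input}")
        prompt = self.character + "\n" + "\n".join(self.memory)
        decision = self.brain(prompt).strip()
        # Hypothetical trigger convention: the brain answers
        # "ACTION <name> <argument>" when an action should run.
        if decision.startswith("ACTION "):
            parts = decision.split(" ", 2)
            name = parts[1] if len(parts) > 1 else ""
            arg = parts[2] if len(parts) > 2 else ""
            handler = self.actions.get(name)
            reply = handler(arg) if handler else f"unknown action: {name}"
        else:
            reply = decision
        self.memory.append(f"agent: {reply}")
        return reply


def fake_brain(prompt: str) -> str:
    """Stub model: looks only at the latest line of the conversation."""
    last_line = prompt.splitlines()[-1]
    if "tweet" in last_line:
        return "ACTION post_tweet gm"
    return "I can only post tweets in this demo."


def post_tweet(text: str) -> str:
    """Stub action: a real agent would call a social media API here."""
    return f"tweeted: {text}"
```

Calling `Agent(brain=fake_brain, actions={"post_tweet": post_tweet}).handle("post a tweet")` routes the request through the brain and then into the `post_tweet` action. The point of the sketch is that the code running on the machine is mostly glue between one model call and a table of API calls.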

Decentralized vs. Verifiable

The architecture of AI agents bothered a lot of people, including me.

You see, the code that actually runs on the machine mostly handles API calls: calls to the model, and then calls to the APIs each action prescribes. But an LLM's output is not deterministic. Given the same user input, you are not guaranteed to get the same answer back, so conventional consensus fails in this setting. You can't run the same input across multiple devices and cross-verify the results, because the results all differ. In short, there's no point in decentralizing AI agents.

However, agents do need to be verified. You want to be sure that the agent's output really is the result of your query, and that the actions you commanded are actually executed.
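The difference can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than how Opacity or any other AVS works: `sampled_model` simulates sampling noise by indexing a fixed list with a per-node seed so the example is reproducible, and an HMAC with a shared key stands in for a real attestation, which would more plausibly be a public-key signature or a proof.

```python
import hashlib
import hmac
from collections import Counter
from typing import List

# Hypothetical phrasings a sampled model might return for the same prompt.
PHRASINGS = ["buy now", "buy at market", "place a buy order", "go long"]


def sampled_model(prompt: str, seed: int) -> str:
    """Stand-in for an LLM with sampling: same prompt, different text per node."""
    return f"{prompt}: {PHRASINGS[seed % len(PHRASINGS)]}"


def has_supermajority(answers: List[str], threshold: float = 2 / 3) -> bool:
    """Replication-style consensus: do enough nodes report the identical answer?"""
    top_count = Counter(answers).most_common(1)[0][1]
    return top_count / len(answers) >= threshold


def attest(key: bytes, prompt: str, response: str) -> str:
    """Bind a response to its prompt. Hashing each part first gives fixed-length
    fields, so the prompt/response boundary cannot be shifted."""
    message = (hashlib.sha256(prompt.encode()).digest()
               + hashlib.sha256(response.encode()).digest())
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, prompt: str, response: str, tag: str) -> bool:
    """Check that this exact (prompt, response) pair is the one that was attested."""
    return hmac.compare_digest(attest(key, prompt, response), tag)
```

With five simulated nodes, `has_supermajority([sampled_model("execute BTC trade", s) for s in range(5)])` is false because no answer is repeated often enough, while `verify` still accepts the one response that was attested and rejects any other. Verification asks whether a single run was honest; it does not need the runs to agree with each other.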

Where’s the Stake

An important part of restaking is its ingenious exploitation of tokenomics between the AVS, operators, and restakers. Restakers contribute stake for security and get rewarded for their contribution.

Nonetheless, we're still in the stone age of AI agent token development: most agent projects are simply launching tokens without real utility.

For restaking to work properly, agents need both financial infrastructure and revenue streams. This means developing treasury modules to manage funds and establishing clear paths to generate revenue. However, it is still too early for anyone to have gained a meaningful foothold in these critical components.

A Speculation on EigenLayer’s Vision

A bet on usage: Adoption is a significant issue for our industry. Because EigenLayer's ecosystem consists primarily of middleware, AVS adoption is, and will remain, a major roadblock. However, collaborating with open-source agent frameworks such as AI16Z and letting AVSs build action plugins creates an intuitive user interface for every AVS. Users can simply tell an agent to "run my transaction first using Predicate to check validity, then process it on Solana." The adoption burden is shifted, in part, to the middleware provider, while UX and DX become as natural as conversation.

A bet on volume: This is step two, but importantly, any agent can be an AVS. Agents don't have to be, but they can. As mentioned earlier, agents don't need decentralization; they can operate on a single machine. However, they can also be parallelized based on demand, allowing an agent AVS to live autonomously in perpetuity. The bottleneck here is token integration. The key questions remain: Will agents manage their own treasuries, and will this become a standard?

I've wrestled with whether betting on AI means abandoning the original restaking vision. After some thinking, I don't think so. From first principles, crypto and web3 need modular middleware, and that middleware needs both a composable Proof-of-Stake system and users. EigenLayer seems to want to use AI agents to kill two birds with one stone.

Agents give restaking two convenient edges: better adoption potential than current AVSs and greater volume potential in future paradigms. The engineering roadmap appears fairly straightforward. However, just like everything in crypto, the key lies in solving agent tokenomics, and we'll have to watch how this develops.

Read more:

A list of AI agent tools

Opacity’s action plugin on Eliza.os

Summary of EigenLayer’s AI movement in the past week from Soubhik

News Bites

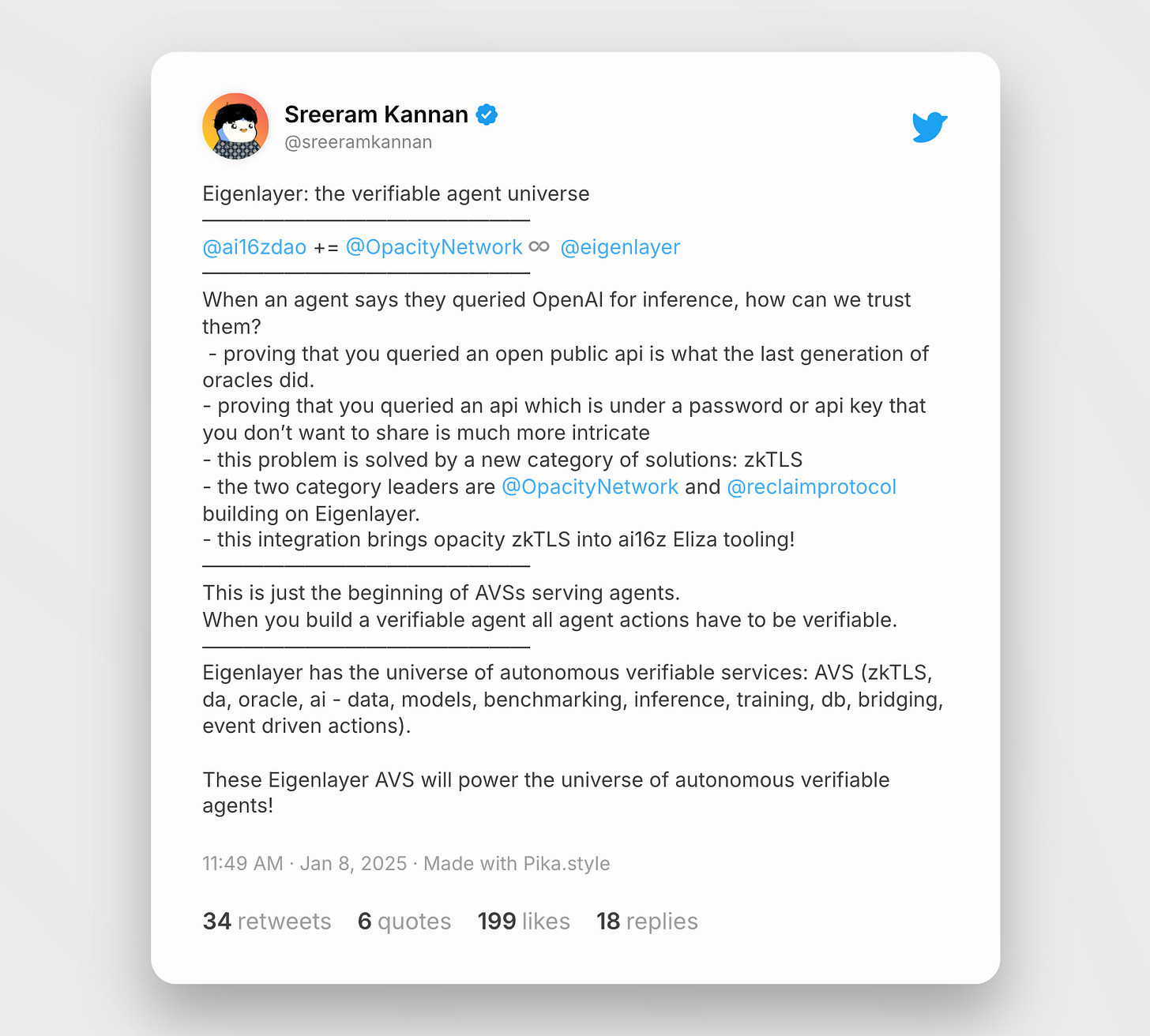

Sreeram explains how Opacity AVS helps with the verifiability of AI agents.

Gajesh’s demo on using Opacity AVS to build a verifiable agent.

Othentic’s research on cross-agent task authorization and permissions.

Big shout-out to ARPA for its consistent rewards campaigns.

Renzo announced support for Binance staked SOL.

Jito Restaking’s deposit is opening for a limited time on Tuesday, January 14th.

Food for Thought

Decentralization and verifiability are two completely different concepts.

We've always equated decentralization with the entirety of Web3, as the concept is deeply tied to PoW or PoS, where multiple nodes in a network work together to agree on a transaction.

Now, as zero-knowledge solutions become more prevalent, many new verifiable services, AI agents among them, either do not need to be decentralized or cannot be. It seems we might need to get used to the growing presence of verifiable services instead of thinking solely in terms of decentralization.

That's it for this week's newsletter! As always, feel free to send us a DM or comment directly below with your thoughts or questions.

If you want to catch up with the latest news in the restaking world, give our curated X list a follow!

See you next week and thanks for reading,